CIKM2021

CIKM2021|Best Short Paper|Neuron Campaign for Intialization Guided by Informaiton Bottleneck Theory

words at the front

Hi, it’s so glad to introduce my first work in my undergraduate life. Fortunanetly, it won the best short paper award in CIKM2021. I am so exicited to get this award! So despite introducing our work, I would like to share the excitement about my great achievement. Also I think initialization strategy is an important part in training DNN while most of us ignore it and only use the default method given by Torch or Tensorflow. Thanks again to all the coauthors, Xu Chen, Lun Du, Qiang Fu, Shi Han, Dongmei Zhang. Special thanks to Bohan Wang who help me for doing some proofs.

Resource

PDF:Neuron Campaign for Initialization Guided by Information Bottleneck Theory Poster: Underline | Neuron Campaign for Initialization Guided by Information Bottleneck Theory Chinese Version: CIKM2021|Best Short Paper|基于Information Bottleneck理论的神经网络竞争初始化策略 - 知乎 (zhihu.com)

Why study the initialization strategy?

We need to go deeper! Nowadays, DNN has developed in an unexpected way. It is so fast that nothing can compare with it. As a result, in one hand, we can deal with more diffcult task with much better performance. However, it indeed suffers from a lot of problems. One of the hardest one is the difficulty of training (optimizing) a good neural network. And the key in how to train a good DNN is to fight against some drawback caused by backpropagation.

So the question should be raised at first: What is a good training procedure? The answer should be:

- no gradient explosion or gradient vanishment (nan in the training)

- converge fast

- good generalization ability

And there is a specific pheonmonon which we will talk abou in the next section.

Hard to understand? We will take an concrete example. Training a DNN model is just like cooking. How to choose a good optimizer (Adam or SGD)? How large the learning rate is? Whether use the regularization term? These thing are like condiment in cooking a delicious meal. Whether use sugar? How much sugar is good? These things matter to the training result of the DNN.

So as these paper are all important in the training, we take a specific one: Initialization strategy.

Another question is that: why the initialization so important? what is the result of a bad initialization?

We take an optimization landscape as an example.

Our target is to rearch the local minima, the bottom of the valley. Anmd if we just init at a bad position on the top of the maintain. It may be quite hard to find a suitable local minima. The initialization indeed mean a lot expecially in some cases when the task is difficult like BERT.

From the another perspective is that the weight of pretrain and meta learning can somehow be viewed as an initialization method. Learn more about the initalization method can also help us to understand the weight of pretrain or help the pretrain to achieve better performance.

The history of initialization

The initialization topic comes out when people find that the zero initialization is useless. As all neurons in the same layer share the same optimization procedure. It just compress the expression of the whole layer into a single neuron.

Then the random initialization with Gaussian distribution comes out. The concrete form is: \(W \sim N(0, 0.01^2)\) However, as the layer goes more deeper, this random initialization will cause the problem of gradient explosion and gradient vanishment.

gradient vanishment and gradient explosion

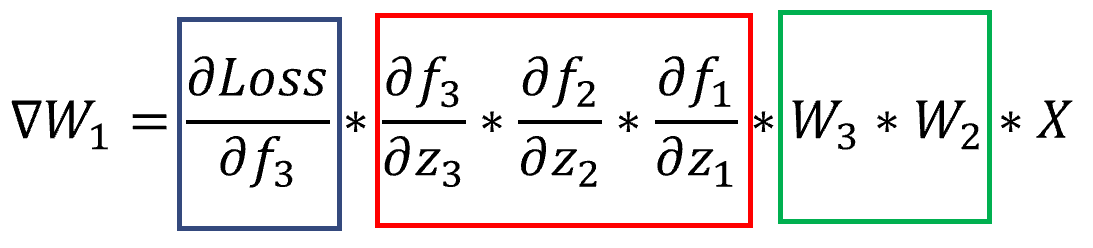

We take an concrete three layer MLP as an example to figure out how these problems come up.

The backpropagation gradient of the first layer in the three-layer MLP can be written as:

It is mainly component in three parts.

- The deviation of the loss function

- The deviation of the activation function of each layer

- The weights of the front layer

Consequently, computing a gradient needs multiple consecutively for six times. This gradient is really sensitive and will change in large scale. It will increase or decrease exponentially. Think about that, each term is equal to 0.1, the gradient will become almost 0.

Solution

Most of the initialization strategies aim to solve above problems. Here we introduce the most popular two: Xavier Initialzation and He Initialization. This is a only brief introduction. More things can be found in How to initialize deep neural networks? Xavier and Kaiming initialization • Pierre Ouannes (pouannes.github.io)

The initution of these methods are how to maintain the information flow in both forward and backward. To avoid the situation like gradient vanish, which nearly no information remain in the front layer. So the key is to keep the output variance of each layer the same.

Keep this in mind, we first share the Xavier Initialization, the concrete form is: \(W\sim U \left[ - \frac{\sqrt{6}}{\sqrt{n_j+n_{j+1}}}, \frac{\sqrt{6}}{\sqrt{n_j+n_{j+1}}} \right]\) where $n_i$ and $n_{i+1}$ are the input and output dimension of a particular layer, respectively.

Xavier can guide the variance the same both forward and backward with the following assumptions.

- linear activation function

- $f’(0)=0$

- The mean value of input and weights are all zero.

- The input $x$ and weight $W$ are i.i.d.

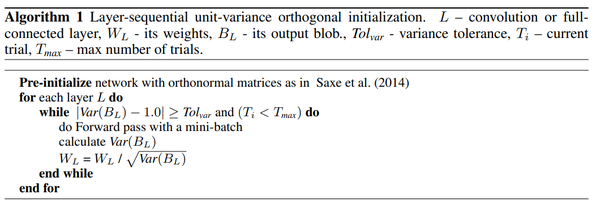

And Kaiming thought that the linear activation is not so practical use. So He initialization is specially designed for the ReLU activation function. \(W \sim N \left( 0, \sqrt{\frac{2}{n_j}} \right)\) however,these methods still have so many assumptions. A data driven method called LSUV is proposed in All YOU NEED IS A GOOD INIT. The key is to let the data output of each layer equal to 1.

The detailed algorithm are as follows:

The idea is quite straight forward:

- Sample some training data and do a simple forward on the first layer of the DNN.

- Calculate the variance of the output.

- If larger than 1, it will scale the weight of the first layer. For example, it the variance is $2$, then we divide $\sqrt{2}$, to keep the variance to 1

- Repeat the above on each layer.

In a word, the above methods can be summaried as the variance scaling initialization strategy, which care about how to control the variance of DNN to avoid the gradient vanish and explosion. However, they do not care about how it will fast the convergence speed or how to enhance the generalization ability. And those are partly reason for our paper.

A step deeper. Why such strange idea?

We will go into details in the motivation of this paper with some questions.

why we not study other component like optimizer but initialization strategy?

One reason is that everthing in the training needs to be differentiable. However, initialization is the first phase before training, which means we can use any method as we wish to achieve our goal.

What is the motivation for this idea?

This all comes from a pheonmenon we observed. In this experiment, we care about the change of weights on each iteration. The phenonmon is that the weights fluctuate a lot, sometimes positive, somtimes negative. It indicates that this system is indeed not stable. (Learning rate could also be problematic)

Then the question should be raised: How the initialization help to stabilization?

Thinking about an optimization landscape, the initialization may be a plain with no concrete direction of where to go. Then our model will jump from everwhere with no order.

Our solution is that can we locate in a good place at first which can help us to a good local minima directly to avoid the above pheonmenon.

Thus, the key of our paper is that:

- The initialization point should have some discriminative ability (closer to a local minima)

- guided by Information Bottleneck (The local minima is good)

Property of a good initialization: guided by Information Bottleneck Theory

In this section, we will first introduce the information bottleneck theory and recognize what good property of the initialization should have (related with Information Bottleneck Theory).

RIP, Naftali.

In about one week after the accecpt of this paper, I was sad to hear that Naftali Tishby, the founder of the Information Bottleneck, left this world forever. This theory has been long debated and I believe it’s a correct way to understand DNN.

He dances like a hummingbird in the wind and flies to the next life.

A brief introduction to Information Bottleneck Theory

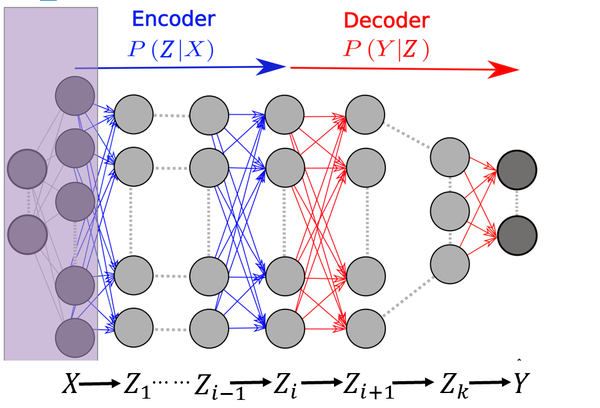

The information bottleneck theory is about how to observe the training procedure of DNN. It introduces two criterions. The mutual information between input $X$ and the hidden representation $Z$, $MI(X;Z)$. The mutual information between output $Y$ and the hidden representation $Z$, $MI(Z;Y)$. The experiments record the change of the two criterions

The findings are from two perspectives:

- From the perspective of different layers: $MI(Z;Y)$ will increase from the first layer(close to the input) to the last layer(close to the output) while the $MI(X;Z)$ will decrease.

So the front layer should care more about the input information maintenance while the rear layer should care more about the discriminative ability enhancement. - From the perspective of different epoches: At first, DNN will try to memory all the training data, thus both $MI(Z;Y)$ and $MI(X;Z)$ will increase. As the DNN memory most of the information, it will compress the unnesserary information in $X$, which means $MI(X;Z)$ will decrease to enhance the generalization ability. While at the same time, $MI(Z;Y)$ will increase continuously.

In this paper, our method is designed mainly based on the first bullet.

where the front layer is the encoder which cares more about the input information maintenance. The rear layer is the decoder which cares more about the discriminative ability enhancement.

Why use the Information Bottleneck Theory as the criterion?

Actually, our aim is only to increase the discriminative ability (similar with $MI(Z;Y)$). However, we find that though it can achieve much faster convergence, it suffers from the bad generalization ability (experiments can be found in the ablation study). Then we think of the information bottleneck theory which help us to have a good initialization point may also benefit the final generalization ability.

The criterion for each layer is \(\alpha MI(X;Z) + (1-\alpha) MI(Z;Y)\) In the front layer, the $\alpha$ should be large to maintain more input information, the opposite to the rear layer.

Simplication on the two criterions

Since the mutual information is hard to compute in practice (quite computational expensive), we do some simple approximate. (No proof here for better understanding, if you have any question, contact with me freely).

For $MI(X;Z)$:

-

It can be written as $MI(X;Z)=H(Z)-H(Z X)$ -

$H(Z X)=0$ as the DNN is a determinstic function. Their is no uncertainty - $H(Z) \propto std(Z)$ (Please think about the correlation with PCA)

For $MI(Z;Y)$, it can be simlified as: \(tr(\hat{H}\hat{H}^T)-\frac{1}{N}\sum_{j=0}^Ntr(\hat{Z}^T\Pi^j\hat{H})\) where $\mathbf{\Pi} = { \mathbf{\Pi}^j \in \mathbb{R}^{m \times m} }^N_{j=1}$ is a set of diagonal matrices whose diagonal entries encode the membership of the $m$ samples in $N$ classes. The diagonal entry $\mathbf{\Pi}^j_{i,i}=1$ indicates the $i_{th}$ sample belongs to the $j_{th}$ class.

Inituitively, the first term is the inter-class variance, while the second term is the intra-class variance. So here, we just enlarge the inter-class variance while minmizing the intra-class variance.

After we simplified the criterion, we go on the final step: how to get the initialization weight with such properties.

Neuron Campaign Algorithm: achieve any property you want.

I am so excited to introduce this part: the most impressive part in this paper. This section we mainly talk about how to achieve the initialization as it is hard to generate the weight directly.

Here we propose the Neuron Campaign Algorithm. I will first talk about some intuitions for you to better understanding.

We take a one layer MLP with the shape [784, 100] as a concrete example

- The basic understanding for one layer MLP is a combination of neurons (or perceptrons). So the example is a combination of 100 neurons.

- When we do the random initialization, there must be some good neurons and some bad neurons. (good or bad is determined by the criterion we use)

- As we cannot generate good neurons directly, but there always have some good neuron generate by random. So why not generate more neurons and select the good neurons among them? Generate 1000 neurons and select the best 100 neurons as the initialization.

- The intuition is just like it is hard to win the prize with 100 lottery tickets, but it is much easier to achieve it with 1000 lottery tickets.

So the procedure should be:

- pre-initialize with methods like Xavier or He Initialization [784, 1000]. (1000 is just the number of candidate neurons you want)

- selected the neurons with highest score on our properties

- Combine them as the initialization weights

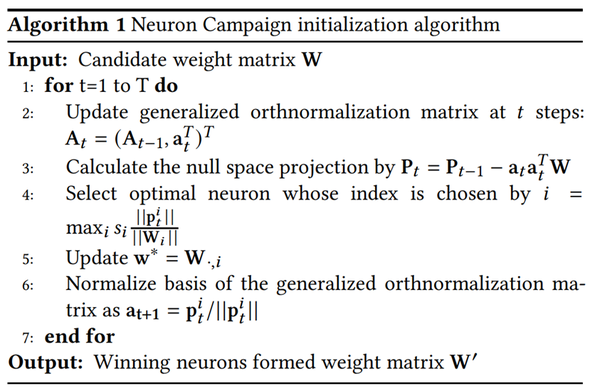

The details of this algorithm are shown as follows:

Notice that we also stress the importance of the diversity (orthogonality) of neurons. This is in case that the selected neurons to be too consistency which will hurt the optimization like the zero initialization.

The orthogonal constraint is that except for the highest score. The new neuron should also have large norm on the zero space of the selected neurons

Experiment

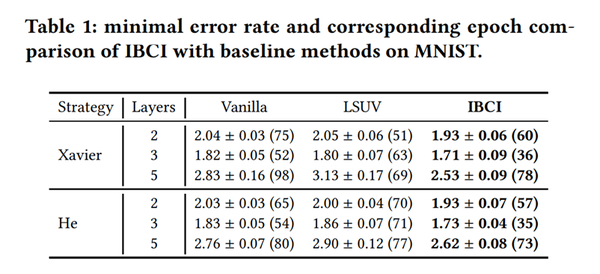

In the experimental section, we demonstrate the effectiveness of our initialization strategy on the MNIST dataset, and we evaluate our method on MLPs of different architectures. In the main results, we combine our algorithm with He initialization and Xavier initialization as the basic method for generating Neuron sets. The numbers in parentheses are the number of training rounds to achieve the best performance on the test set. the smaller the epoch, the faster the convergence rate. We can find that our algorithm shows good generalization performance and faster convergence on the most frequently used three-layer deep neural network.

Conclusion

In this paper, we mainly talk about two part:

- What is a good initialization: introduce the information bottleneck theory, increase the discriminative ability of DNN.

- How to achieve the good initialization with suitable properties: A neuron campaign algorithm

Future work

Anyway, this work is still ongonging. The experiments are only conducted on the toy dataset like MNIST and the basic model MLP. A lot of things need to do!

- How to extend to more broader used architecture like CNN, transformer

- Can we find other meaningful properties other than the mutual information

- Can these understanding help to learn more about the pretraining weight

- Can we have more theoritical guarantee

Looking forward to discuss with you guys!